The Linear Classifier#

To illustrate the workflow for training a deep learning model in a supervised manner, this notebook will walk you through the simple case of training a linear classifier to recognize images of cats and dogs. While deep learning might seem intimidating, dont worry. Its conceptual underpinnings are rooted in linear algebra and calculus - if you can perform matrix multiplication and take derivatives you can understand what is happening in a deep learning workflow.

Some code cells will be marked with

##########################

######## To Do ###########

##########################

This indicates that you are being asked to write a piece of code to complete the notebook.

Load packages#

In this cell, we load the python packages we need for this notebook.

import imageio

import skimage

import sklearn.model_selection

import skimage.color

import skimage.transform

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import os

The supervised machine learning workflow#

Recall from class the conceptual workflow for a supervised machine learning project.

First, we create a training dataset, a paired collection of raw data and labels where the labels contain information about the “insight” we wish to extract from the raw data.

Once we have training data, we can then use it to train a model. The model is a mathematical black box - it takes in data and transforms it into an output. The model has some parameters that we can adjust to change how it performs this mapping.

Adjusting these parameters to produce outputs that we want is called training the model. To do this we need two things. First, we need a notion of what we want the output to look like. This notion is captured by a loss function, which compares model outputs and labels and produces a score telling us if the model did a “good” job or not on our given task. By convention, low values of the loss function’s output (e.g. the loss) correspond to good performance and high values to bad performance. We also need an optimization algorithm, which is a set of rules for how to adjust the model parameters to reduce the loss

Using the training data, loss function, and optimization algorithm, we can then train the model

Once the model is trained, we need to evaluate its performance to see how well it performs and what kinds of mistakes it makes. We can also perform this kind of monitoring during training (this is actually a standard practice).

Because this workflow defines the lifecycle of most machine learning projects, this notebook is structured to go over each of these steps while constructing a linear classifier.

Create training data#

The starting point of every machine learning project is data. In this case, we will start with a collection of RGB images of cats and dogs. Each image is a multi-dimensional array with size (128, 128, 1) - the first two dimensions are spatial while the last is a channel dimension (one channel because it is a grey scale image - for an RGB image there would be 3 channels). The dataset that we are working with is a subset of Kaggle’s Dogs vs. Cats dataset.

!wget https://storage.googleapis.com/datasets-spring2021/cats-and-dogs-bw.npz

# Load data from the downloaded npz file

with np.load('cats-and-dogs-bw.npz') as f:

X = f['X']

y = f['y']

print(X.shape, y.shape)

(8192, 128, 128, 1) (8192,)

In the previous cell, you probably observed that there are 4 dimensions rather than the 3 you might have been expecting. This is because while each image is (128, 128, 1), the full dataset has many images. The different images are stacked along the first dimension. The full size of the training images is (# images, 128, 128, 1) - the first dimension is often called the batch dimension.

##########################

######## To Do ###########

##########################

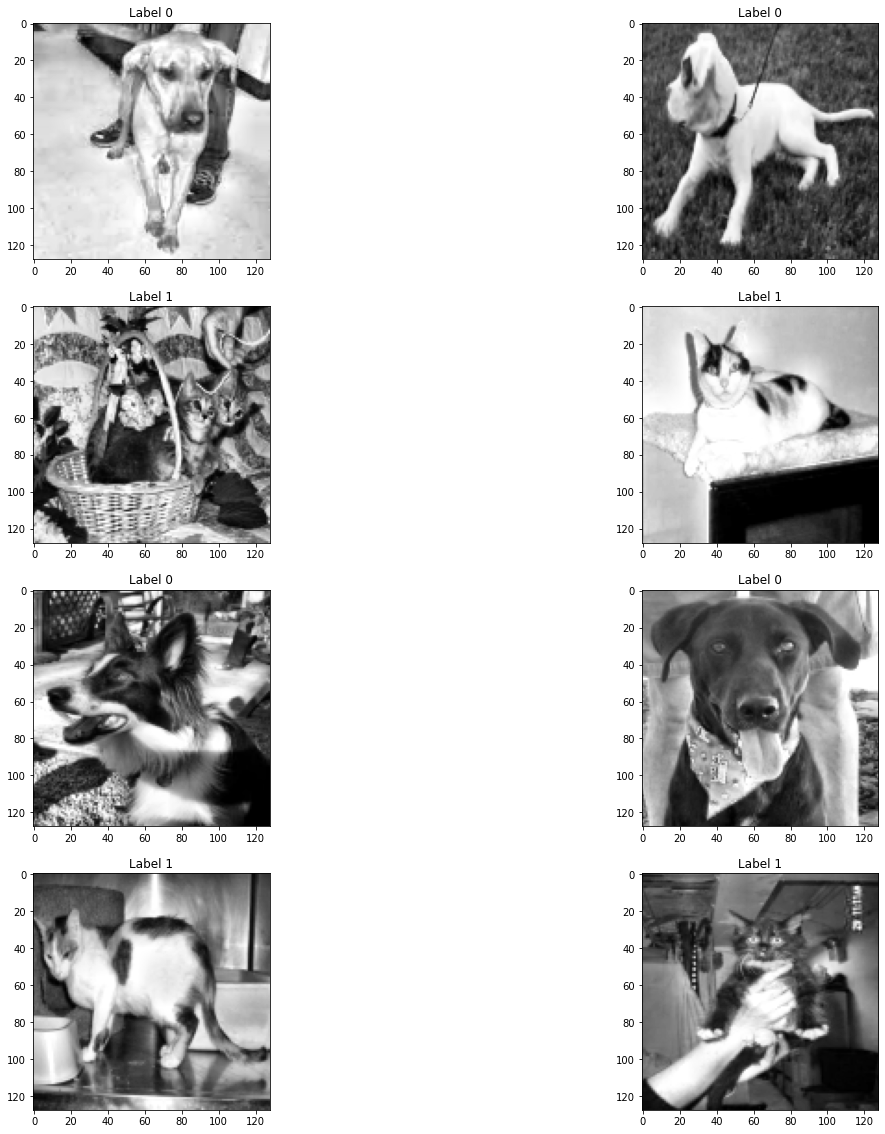

# Use matplotlib to visualze several images randomly drawn from the dataset

# For each image, set the title to be the y label for the image

Show code cell content

fig, axes = plt.subplots(4, 2, figsize=(20,20))

for i in range(8):

axes.flatten()[i].imshow(X[i,...,0], cmap='gray')

axes.flatten()[i].set_title('Label ' + str(y[i]))

For this exercise, we will want to flatten the training data into a vector.

# Flatten the images into vectors of size (# images, 16384, 1)

X = np.reshape(X, (-1, 128*128, 1))

print(X.shape)

(8192, 16384, 1)

Split the training dataset into training, validation, and testing datasets#

How do we know how well our model is doing? A common practice to evaluate models is to evaluate them on splits of the original training dataset. Splitting the data is important, because we want to see how models perform on data that wasn’t used to train them. This splitting practice usually produces 3 splits.

The training dataset used to train the model

A validation dataset used to evaluate the model during training.

A held out testing dataset used to evaluate the final trained version of the model While there is no hard and fast rule, 80%, 10%, 10% splits are a reasonable starting point.

# Split the dataset into training, validation, and testing splits

X_train, X_test, y_train, y_test = sklearn.model_selection.train_test_split(X, y, train_size=0.75)

The linear classifier#

The linear classifier produces class scores that are a linear function of the pixel values. Mathematically, this can be written as $\vec{y} = W \vec{x}$, where $\vec{y}$ is the vector of class scores, $W$ is a matrix of weights and $\vec{x}$ is the image vector. The shape of the weights matrix is determined by the number of classes and the length of the image vector. In this case $W$ is 2 by 4096. Our learning task is to find a set of weights that maximize our performance on our classification task. We will solve this task by doing the following steps

Randomly initializing a set of weights

Defining a loss function that measures our performance on the classification task

Use stochastic gradient descent to find “optimal” weights

Create the matrix of weights#

Properly initializing weights is essential for getting deep learning methods to work correctly. The two most common initialization methods you’ll see in this class are glorot uniform (also known as Xavier) initialization and he initialization - both papers are worth reading. For this exercise, we will randomly initialize weights by using glorot uniform initialization. In this initialization method, we sample our weights according to the formula

where $n$ is the number of columns in the weight matrix (4096 in our case).

Lets create the linear classifier using object oriented programming, which will help with organization

class LinearClassifier(object):

def __init__(self, image_size=16384):

self.image_size=image_size

# Initialize weights

self._initialize_weights()

def _initialize_weights(self):

##########################

######## To Do ###########

##########################

# Randomly initialize the weights matrix acccording to the glorot uniform initialization

self.W = # Add weights matrix here

Show code cell content

class LinearClassifier(object):

def __init__(self, image_size=16384):

self.image_size=image_size

# Initialize weights

self._initialize_weights()

def _initialize_weights(self):

##########################

######## To Do ###########

##########################

# Randomly initialize the weights matrix acccording to the glorot uniform initialization

self.W = np.random.uniform(low = -1/np.sqrt(self.image_size),

high=1/np.sqrt(self.image_size),

size=(2,self.image_size))

Apply the softmax transform to complete the model outputs#

Our LinearClassifier class needs a method to perform predictions - which in our case is performing matrix multiplication and then applying the softmax transform. Recall from class that the softmax transform is given by

and provides a convenient way to convert our class scores into probabilities

##########################

######## To Do ###########

##########################

# Complete the predict function below to predict a label y from an input X

# Pay careful attention to the shape of your data at each step

def predict(self, X, epsilon=1e-5):

y = # matrix multiplication

y = # Apply softmax

return y

# Assign methods to class

setattr(LinearClassifier, 'predict', predict)

Show code cell content

##########################

######## To Do ###########

##########################

# Complete the predict function below to predict a label y from an input X

# Pay careful attention to the shape of your data at each step

def predict(self, X, epsilon=1e-5):

y = np.matmul(X[...,0], self.W.T )

# Apply softmax - epsilon added for numerical stability

y = np.exp(y)/np.sum(np.exp(y) + epsilon, axis=-1, keepdims=True)

return y

# Assign methods to class

setattr(LinearClassifier, 'predict', predict)

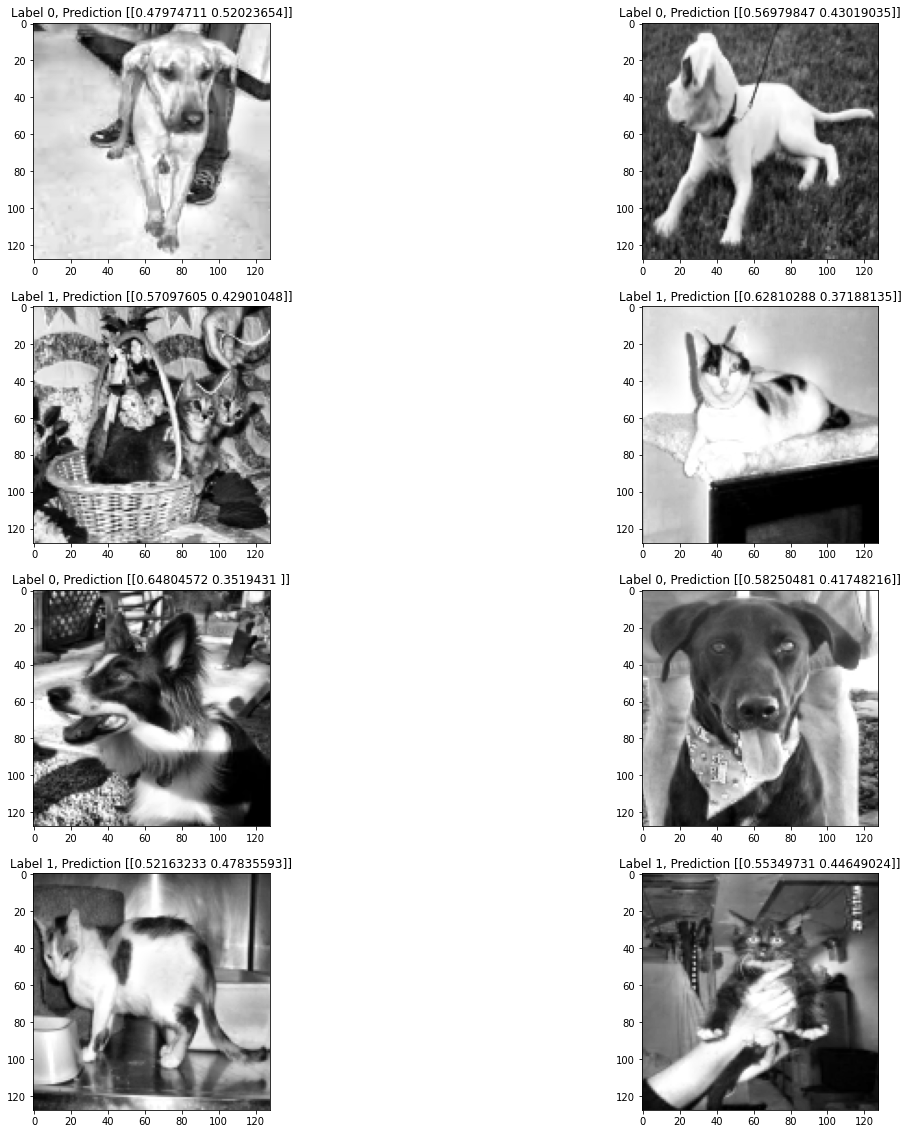

Now lets see what happens when we try to predict the class of images in our training dataset using randomly initialized weights.

lc = LinearClassifier()

fig, axes = plt.subplots(4, 2, figsize=(20,20))

for i in range(8):

# Get an example image

X_sample = X[[i],...]

# Reshape flattened vector to image

X_reshape = np.reshape(X_sample, (128,128))

# Predict the label

y_pred = lc.predict(X_sample)

# Display results

axes.flatten()[i].imshow(X_reshape, cmap='gray')

axes.flatten()[i].set_title('Label ' + str(y[i]) +', Prediction ' + str(y_pred))

What do you notice about the initial results of the model?

Stochastic gradient descent#

To train this model, we will use stochastic gradient descent. In its simplest version, this algorithm consists of the following steps:

Select several images from the training dataset at random

Compute the gradient of the loss function with respect to the weights, given the selected images

Update the weights using the update rule $\Delta W_{ij} \rightarrow \Delta W_{ij} - lr\frac{\partial loss}{\partial W_{ij}}$

Recall that the origin of this update rule is from multivariable calculus - the gradient tells us the direction in which the loss function increases the most. So to minimize the loss function we move in the opposite direction of the gradient.

Also recall from the course notes that for this problem we can compute the gradient analytically. The gradient is given by

where $1$ is an indicator function that is 1 if the statement inside the parentheses is true and 0 if it is false.

def grad(self, X, y):

# Get class probabilities

p = self.predict(X)

# Compute class 0 gradients

temp_0 = np.expand_dims(p[...,0] - (1-y), axis=-1)

grad_0 = temp_0 * X[...,0]

# Compute class 1 gradients

temp_1 = np.expand_dims(p[...,1] - y, axis=-1)

grad_1 = temp_1 * X[...,0]

gradient = np.stack([grad_0, grad_1], axis=1)

return gradient

def loss(self, X, y_true):

y_pred = self.predict(X)

# Convert y_true to one hot

y_true = np.stack([y_true, 1-y_true], axis=-1)

loss = np.mean(-y_true * np.log(y_pred))

return loss

def fit(self, X_train, y_train, n_epochs, batch_size=1, learning_rate=1e-5):

# Iterate over epochs

for epoch in range(n_epochs):

n_batches = np.int(np.floor(X_train.shape[0] / batch_size))

# Generate random index

index = np.arange(X_train.shape[0])

np.random.shuffle(index)

# Iterate over batches

loss_list = []

for batch in range(n_batches):

beg = batch*batch_size

end = (batch+1)*batch_size if (batch+1)*batch_size < X_train.shape[0] else -1

X_batch = X_train[beg:end]

y_batch = y_train[beg:end]

# Compute the loss

loss = self.loss(X_batch, y_batch)

loss_list.append(loss)

# Compute the gradient

gradient = self.grad(X_batch, y_batch)

# Compute the mean gradient over all the example images

gradient = np.mean(gradient, axis=0, keepdims=False)

# Update the weights

self.W -= learning_rate * gradient

return loss_list

# Assign methods to class

setattr(LinearClassifier, 'grad', grad)

setattr(LinearClassifier, 'loss', loss)

setattr(LinearClassifier, 'fit', fit)

lc = LinearClassifier()

loss = lc.fit(X_train, y_train, n_epochs=10, batch_size=16)

Evaluate the model#

Benchmarking performance is a critical part of the model development process. For this problem, we will use 3 different benchmarks

Recall: the fraction of positive examples detected by a model. Mathematically, for a two-class classification problem, recall is calculated as (True positives)/(True positives + False negatives).

Precision: the percentage of positive predictions from a model that are true. Mathematically, for a two-class prediction problem, precision is calculated as (True positives)/(True positives + False positives).

F1 score: The harmonic mean between the recall and precision

We will evaluate these metrics on both the training dataset (the examples used during training) and our testing dataset (the examples that we held out). We can also use a confusion matrix to visualize the prediction results.

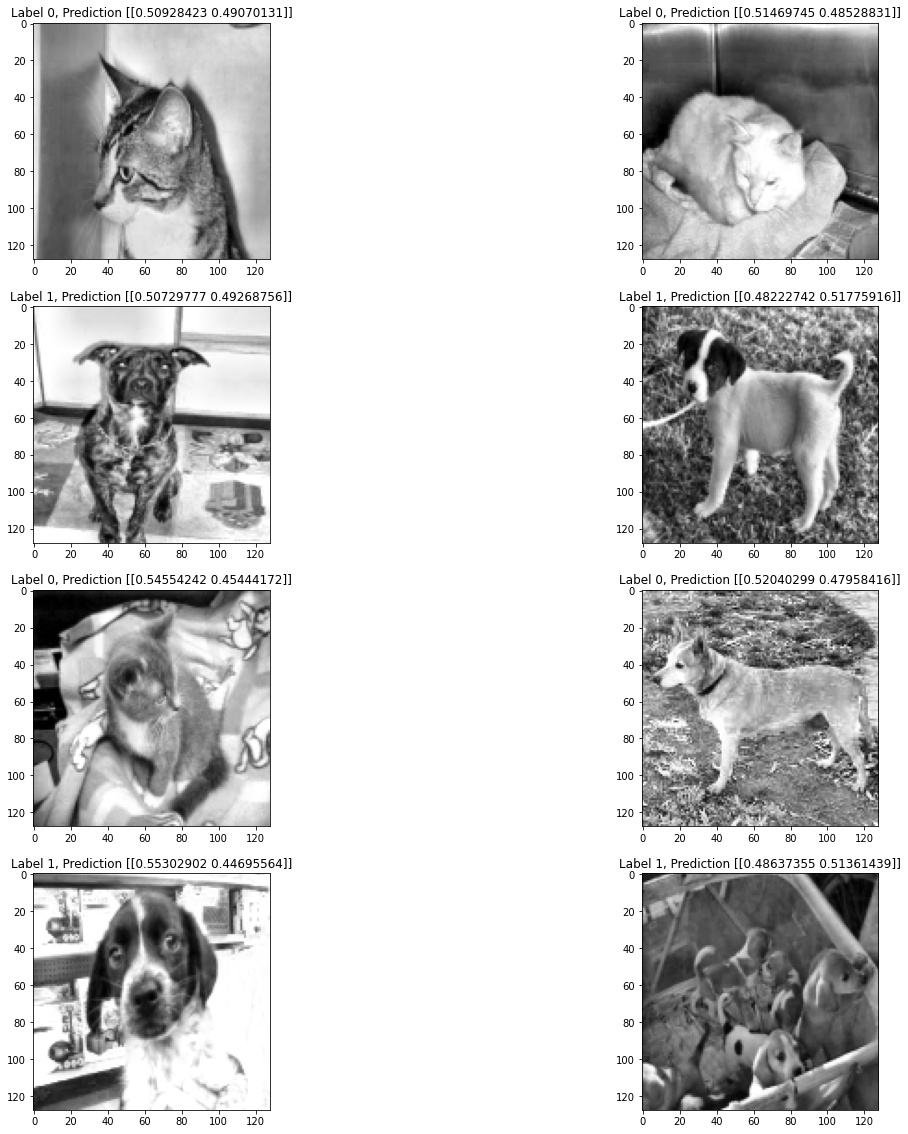

# Visualize some predictions

fig, axes = plt.subplots(4, 2, figsize=(20,20))

for i in range(8):

# Get an example image

X_sample = X_test[[i],...]

# Reshape flattened vector to image

X_reshape = np.reshape(X_sample, (128,128))

# Predict the label

y_pred = lc.predict(X_sample)

# Display results

axes.flatten()[i].imshow(X_reshape, cmap='gray')

axes.flatten()[i].set_title('Label ' + str(y[i]) +', Prediction ' + str(y_pred))

# Generate predictions

y_pred = lc.predict(X_train)

y_pred = np.argmax(y_pred, axis=-1)

# Compute metrics

recall = sklearn.metrics.recall_score(y_train, y_pred)

precision = sklearn.metrics.precision_score(y_train, y_pred)

f1 = sklearn.metrics.f1_score(y_train, y_pred)

print('Training Recall: {}'.format(recall))

print('Training Precision: {}'.format(precision))

print('Training F1 Score: {}'.format(f1))

# Generate predictions

y_pred = lc.predict(X_test)

y_pred = np.argmax(y_pred, axis=-1)

# Compute metrics

recall = sklearn.metrics.recall_score(y_test, y_pred)

precision = sklearn.metrics.precision_score(y_test, y_pred)

f1 = sklearn.metrics.f1_score(y_test, y_pred)

print('Testing Recall: {}'.format(recall))

print('Testing Precision: {}'.format(precision))

print('Testing F1 Score: {}'.format(f1))

Training Recall: 0.458455522971652

Training Precision: 0.5228539576365663

Training F1 Score: 0.4885416666666666

Testing Recall: 0.4780915287244401

Testing Precision: 0.5190274841437632

Testing F1 Score: 0.49771920932589964

Exercise#

Try running your training algorithm a few times and record the results. What do you note about the overall performance? What about the differences between training runs? What about the difference in performance when evaluated on training data as opposed to validation data?

%load_ext watermark

%watermark -u -d -vm --iversions

Last updated: 2021-04-28

Python implementation: CPython

Python version : 3.7.10

IPython version : 5.5.0

Compiler : GCC 7.5.0

OS : Linux

Release : 4.19.112+

Machine : x86_64

Processor : x86_64

CPU cores : 2

Architecture: 64bit

sklearn : 0.0

numpy : 1.19.5

matplotlib: 3.2.2

skimage : 0.16.2

pandas : 1.1.5

imageio : 2.4.1

IPython : 5.5.0